Introduction

In typical interviews you will rarely encounter problems that will directly ask you to sort an array of integers. You might, however, encounter a question that asks you to sort a linked list. Thus it’s important to become familiar with the techniques used in common sorting algorithms.

Usually sorting creeps its head in when it is an intermediate step in a multi-step process to solve a problem. At other times the problem statement will make clear that you are dealing with a sorted array and you should use that information to help you arrive at an optimal solution. If the input array you are dealing with is already sorted then that’s a big hint you should probably consider binary search before considering a less optimal linear time solution.

O(N) algorithms

Counting Sort

Counting sort works by counting the frequency of each unique item and then outputting the items in order. If an item occurs k times , we'll place it k consecutive times. We will assume that we know the ordering of the unique items. For example if we have items 0 - 6, we know 0 < 1 < 2 < ... 6 or if we have letters a - e, we know that a comes before b, b before c, and so forth. Counting sort is useful if the range of items we need to sort are relatively small. For lowercase characters then we have 26 possibilities and counting sort is a reasonable way to sort our items. However if we need to sort integers then we have more than 4 billion possibilities and counting sort will have a massive memory footprint.

Radix Sort

Radix sort is a successive application of counting sort where if we are sorting integers (our range is 0 - 9) and we individually sort the least significant digits first from right to left until we complete the final counting sort by sorting against the most significant digit.

O(N2) Algorithms

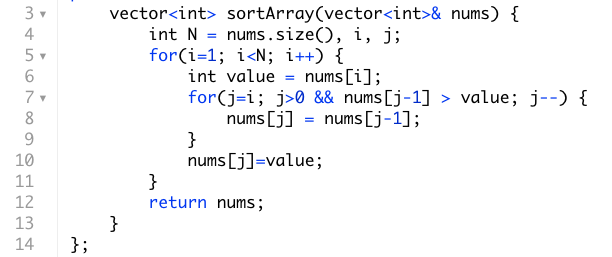

Insertion Sort

Insertion sort processes values one at a time from right to left. Every item to the left of the item that’s being processed is in sorted order. The goal of this algorithm is to insert the new item in the proper position in the left side sorted array of items. Mechanically we do this by finding an insertion position and shifting elements that are greater than our target value one position to the right simultaneously.

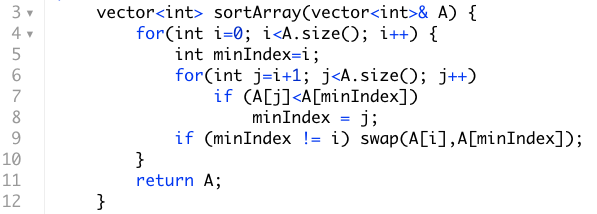

Selection Sort

Selection sort also uses the same principle as insertion sort and keeps a partially sorted array to the left of the item currently being processed. While in insertion sort we continually modify the partially sorted array by inserting a new element somewhere in between its smallest and largest value, in selection sort the partially sorted items are in their final position.

We front load the work and each time try to find the smallest value to the right and adding it as the next item in the partially sorted array to our left. This contrasts with insertion sort where we never examine the items to the right of the value we are processing.

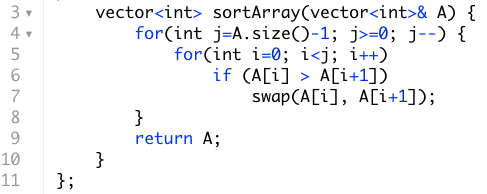

Bubble Sort

Bubble sort will keep a partially sorted array on the far right hand side. Assuming we want to sort in ascending order we will continually bubbling the largest items to the right by finding the largest item seen so far and move it to the right, then the second largest item and so forth. Once we have processed all elements our final array will be sorted. For bubble sort the partial sort is on the right hand side and thus its different from selection sort and insertion sort in this manner. It is similar to the selection sort though in that the partially sorted items in the right are in their final position.

O(NLogN) Algorithms

Merge Sort (Todo)

Quick Sort (Todo)

Heap Sort (Todo)

Other Notes

A sorting algorithm is stable if two elements in the input array with identical keys appear in the same order when the sorted output is processed. Heapsort and Quicksort algorithms are unstable, whereas merge sort and insertion sort are. The key takeaway is stability only matters when the input array contains duplicate values.

Check out the following tool to see a visualization your favorite sorting algorithms.